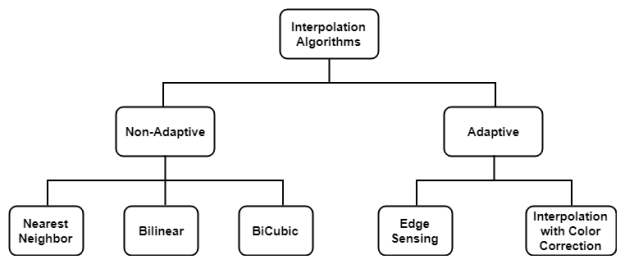

In the last blog, we discussed what Bi-linear interpolation is and how it is performed on images. In this blog, we will learn Bi-cubic interpolation in detail.

Note: We will be using some concepts from the Nearest Neighbour and Bi-linear interpolation blogs. Check them first before moving forward.

Difference between Bi-linear and Bi-cubic:

- Bi-linear uses 4 nearest neighbors to determine the output, while Bi-cubic uses 16 (4×4 neighbourhood).

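- The weights are computed differently: Bi-linear weights fall off linearly with distance, while Bi-cubic weights come from a piecewise cubic polynomial and can be negative.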

So, the only thing we need to know is how the weights are distributed; the rest is the same as Bi-linear.

In OpenCV, the weights are computed by the following code (the whole code can be found here):

```cpp
const float A = -0.75f;

coeffs[0] = ((A*(x + 1) - 5*A)*(x + 1) + 8*A)*(x + 1) - 4*A;
coeffs[1] = ((A + 2)*x - (A + 3))*x*x + 1;
coeffs[2] = ((A + 2)*(1 - x) - (A + 3))*(1 - x)*(1 - x) + 1;
coeffs[3] = 1.f - coeffs[0] - coeffs[1] - coeffs[2];
```
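To experiment with these weights outside OpenCV, the four expressions can be transcribed into Python. This is only a sketch: the function name `cubic_coeffs` and the keyword argument `a` are my own choices for illustration, not part of OpenCV.

```python
def cubic_coeffs(x, a=-0.75):
    """Return the 4 bicubic weights for a fractional offset x in [0, 1).

    Direct transcription of the OpenCV expressions; `a` plays the role of A.
    """
    c0 = ((a * (x + 1) - 5 * a) * (x + 1) + 8 * a) * (x + 1) - 4 * a
    c1 = ((a + 2) * x - (a + 3)) * x * x + 1
    c2 = ((a + 2) * (1 - x) - (a + 3)) * (1 - x) * (1 - x) + 1
    c3 = 1.0 - c0 - c1 - c2  # the four weights always sum to 1
    return [c0, c1, c2, c3]


# Weights for the two offsets used in the worked example of this post.
print(cubic_coeffs(0.25))
print(cubic_coeffs(0.75))
```

Note that the weights for 0.75 are the weights for 0.25 in reverse order, and that the smallest weight is exactly -0.03515625, which the worked example writes as -0.0351.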

The x used in the above code is the fractional offset fx, which is calculated by the code below:

```cpp
fx = (float)((dx+0.5)*scale_x - 0.5);
sx = cvFloor(fx);
fx -= sx;
```

Similarly, for y, use fy in place of x. fy is obtained by replacing dx and scale_x in the above code with dy and scale_y respectively (explained in the previous blog).
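The same mapping can be tried in Python. A small sketch, assuming that the scale is the input size divided by the output size (0.5 when upscaling by 2), which is the value that reproduces the numbers in the example later in this post. The helper name `source_position` is hypothetical.

```python
import math


def source_position(d, scale):
    """Map a destination index d to (integer source index, fractional offset).

    Mirrors the three lines above: compute f, floor it, keep the remainder.
    `scale` is assumed to be input_size / output_size.
    """
    f = (d + 0.5) * scale - 0.5
    s = math.floor(f)
    return s, f - s


# dx = 1 and dy = 0 with a 2x upscale, as in the worked example.
print(source_position(1, 0.5))  # sx and fx
print(source_position(0, 0.5))  # sy and fy
```

For dy = 0 the integer index is negative, meaning the 4×4 neighbourhood starts outside the image. That is why the input has to be padded before interpolating.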

Note: For Matlab, use A= -0.50
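To see where A enters, it may help to read the four expressions as samples of one piecewise cubic kernel (often called the cubic convolution kernel). The symbols W and d below are my own notation, not OpenCV's; d is the distance from the sample position to a neighbouring pixel.

$$
W(d) =
\begin{cases}
(A+2)|d|^3 - (A+3)|d|^2 + 1, & |d| \le 1 \\
A|d|^3 - 5A|d|^2 + 8A|d| - 4A, & 1 < |d| < 2 \\
0, & \text{otherwise}
\end{cases}
$$

With this notation, coeffs[0] = W(x + 1), coeffs[1] = W(x), coeffs[2] = W(1 - x) and coeffs[3] = W(2 - x). The four values sum to 1, which is why the code can compute the last one by subtraction.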

Let’s see an example. We take the same 2×2 image from the previous blog and want to upscale it by a factor of 2 as shown below

Steps:

- In the last blog, we calculated P1. This time let’s take ‘P2’. First, we find the position of P2 in the input image as we did before. With dx = 1 and dy = 0, the coordinate of P2 is (0.75, 0.25).

- Because cubic interpolation needs 4 pixels along each axis (2 on the left and 2 on the right), we pad the input image.

- OpenCV has different methods to add borders, which you can check here. Here, I used the cv2.BORDER_REPLICATE method, but you can use any. After padding, the input image looks like this

- To find the value of P2, let’s first visualize where P2 is in the image. Yellow is the input image before padding. We take the blue 4×4 neighborhood as shown below

- For P2, we use dx and dy to calculate fx and fy from the code above. We get fx = 0.25 and fy = 0.75.

- Now, we substitute fx and fy into the weight code above to calculate the four coefficients. For fy = 0.75 we get coefficients = [-0.0351, 0.2617, 0.8789, -0.1055], and for fx = 0.25 we get coefficients = [-0.1055, 0.8789, 0.2617, -0.0351].

- First, we perform cubic interpolation along each of the four rows (inside the blue box in the above figure) using the weights calculated for fx:

-0.1055 *10 + 0.8789*10 + 0.2617*20 -0.0351*20 = 12.265625

-0.1055 *10 + 0.8789*10 + 0.2617*20 -0.0351*20 = 12.265625

-0.1055 *10 + 0.8789*10 + 0.2617*20 -0.0351*20 = 12.265625

-0.1055 *30 + 0.8789*30 + 0.2617*40 -0.0351*40 = 32.265625

- Now, using the 4 values calculated above, we interpolate along the column using the weights calculated for fy:

-0.0351*12.265 + 0.2617*12.265 + 0.8789*12.265 -0.1055*32.265 ≈ 10.156 (exactly 10.15625 with unrounded weights)

- Similarly, repeat for other pixels.
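The steps above can be put together in a short pure-Python sketch. It rests on a few assumptions of mine: the input image is taken to be [[10, 20], [30, 40]] (inferred from the row sums above), replicate padding is emulated by clamping indices instead of building a padded array, and the helper names `clamp` and `bicubic_pixel` are hypothetical. It also skips the rounding and saturation that OpenCV applies for 8-bit images, so treat it as an illustration rather than a replacement for `cv2.resize`.

```python
import math

A = -0.75  # value used by OpenCV, per the snippet earlier in this post


def cubic_coeffs(x, a=A):
    """Four bicubic weights for a fractional offset x in [0, 1)."""
    c0 = ((a * (x + 1) - 5 * a) * (x + 1) + 8 * a) * (x + 1) - 4 * a
    c1 = ((a + 2) * x - (a + 3)) * x * x + 1
    c2 = ((a + 2) * (1 - x) - (a + 3)) * (1 - x) * (1 - x) + 1
    return [c0, c1, c2, 1.0 - c0 - c1 - c2]


def clamp(i, n):
    """Replicate-border indexing: out-of-range indices reuse the edge pixel."""
    return max(0, min(i, n - 1))


def bicubic_pixel(img, dx, dy, scale_x, scale_y):
    """Interpolate one output pixel from its 4x4 input neighbourhood."""
    h, w = len(img), len(img[0])
    fx = (dx + 0.5) * scale_x - 0.5
    fy = (dy + 0.5) * scale_y - 0.5
    sx, sy = math.floor(fx), math.floor(fy)
    wx = cubic_coeffs(fx - sx)
    wy = cubic_coeffs(fy - sy)
    # Step 1: interpolate along each of the 4 rows with the fx weights.
    rows = []
    for j in range(4):
        y = clamp(sy - 1 + j, h)
        rows.append(sum(wx[i] * img[y][clamp(sx - 1 + i, w)] for i in range(4)))
    # Step 2: interpolate the 4 row results along the column with the fy weights.
    return sum(wy[j] * rows[j] for j in range(4))


img = [[10, 20], [30, 40]]  # assumed 2x2 input image

# P2 is the output pixel at dx = 1, dy = 0.
p2 = bicubic_pixel(img, dx=1, dy=0, scale_x=0.5, scale_y=0.5)
print(p2)

# Repeating the same computation for every output pixel gives the 4x4 result.
out = [[bicubic_pixel(img, dx, dy, 0.5, 0.5) for dx in range(4)] for dy in range(4)]
for row in out:
    print(row)
```

Interpolating rows first and then the column gives the same result as applying all 16 weights at once, because each 2D weight is just the product of one fx weight and one fy weight.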

The final result we get is shown below:

This produces noticeably sharper images than the previous two methods and balances processing time and output quality. That’s why it is widely used (e.g. in Adobe Photoshop).

In the next blog, we will see these interpolation methods using OpenCV functions on real images. Hope you enjoy reading.

If you have any doubts or suggestions, please feel free to ask and I will do my best to help or improve myself. Good-bye until next time.